Graph-Sturctured Sum-Product Networks (GraphSPN)

Kaiyu Zheng^, Andrzej Pronobis*^, Rajesh P. N. Rao^

^ University of Washington, Seattle, WA, USA.

* KTH Royal Institute of Technology, Stockholm, Sweden.

Graph-structured data appear in a wide range of domains, from social network analysis, to computer vision and robotics. Often, the global structure of such data varies, yet dependencies captured by elements of the structure persist and can serve as a powerful source of information for various inference tasks. While many approaches to structured prediction place strict constraints on the interactions between inferred variables, many real-world problems can be only characterized using complex graph structures of varying size, often contaminated with noise when obtained from real data. We introduce Graph-Structured Sum-Product Networks (GraphSPNs), a probabilistic approach to structured prediction for problems where dependencies between latent variables are expressed in terms of arbitrary, dynamic graphs.

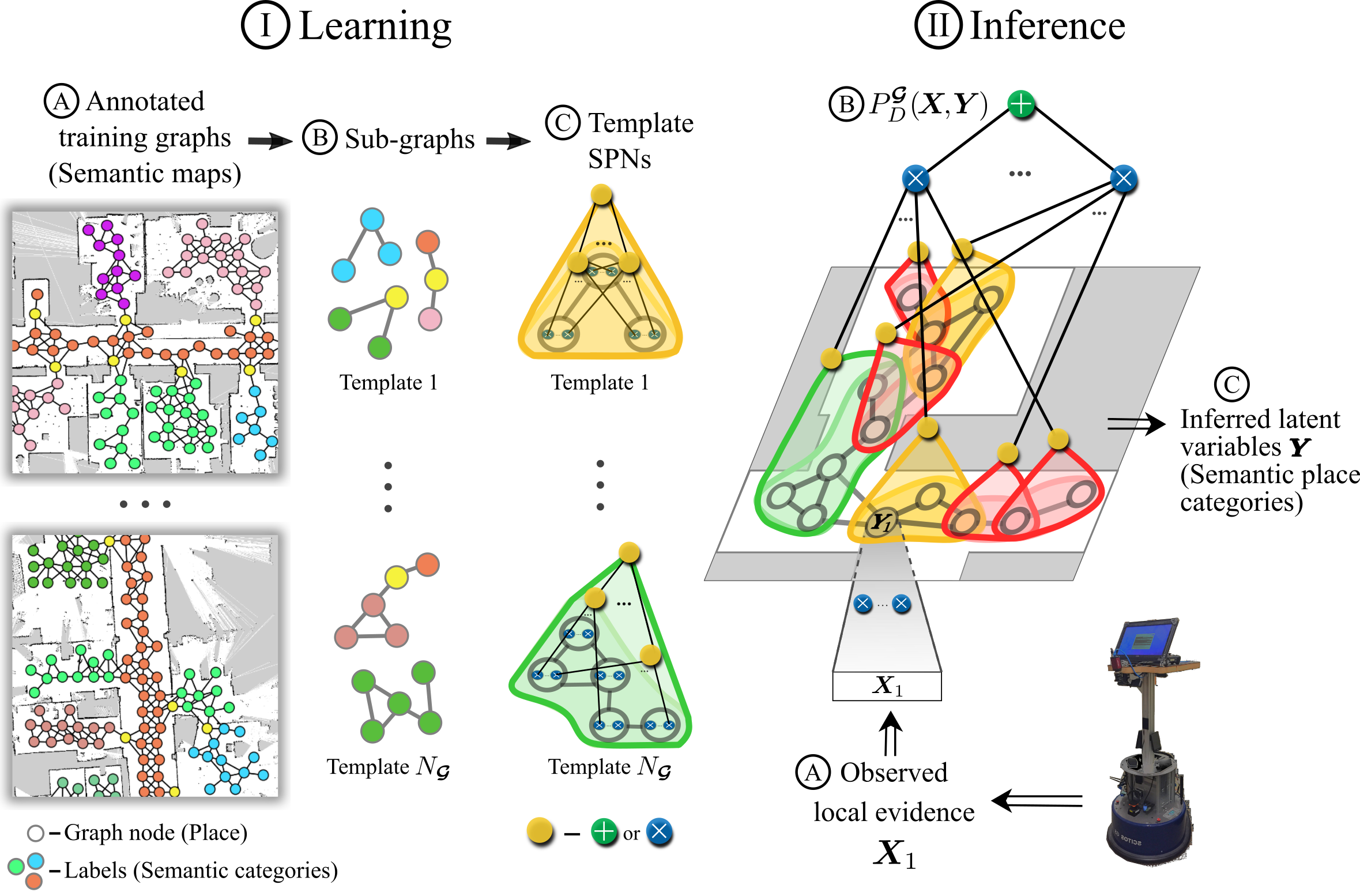

Our framework builds on Sum-Product Networks (SPNs), a recent probabilistic deep architecture with solid theoretical foundations. SPNs can learn probabilistic models capable of representing context-specific independence directly from high dimensional data and perform fast, tractable inference on high-treewidth models. As illustrated in the figure below, the learning and inference procedures of GraphSPNs are quite intuitive. GraphSPNs learn template SPN models representing distributions over attributes of sub-graphs of arbitrary complexity. Then, to perform inference for a specific, potentially expanding graph, they assemble a mixture model over multiple decompositions of the graph into sub-graphs, with each sub-graph modeled by an instantiation of an appropriate template. For more details, please refer to our AAAI'18 paper.

Motivation from Robotics

We developed this method during our research on semantic mapping in robotics. while exploring their environments, robots build a growing body of knowledge captured at different spatial locations, scales, and at different levels of abstraction. Such knowledge can be represented by semantic maps, which are semantically annotated topological graphs grounded onto metric maps (see video below).

In order to integrate the collected spatial knowledge, resolve ambiguities, and make predictions about unobserved places, semantic mapping frameworks often employ structured prediction algorithms. Unfortunately, the relations discovered by a robot exploring a real-world environment tend to be complex and noisy, resulting in difficult inference problems. At the same time, topological graphs are dynamic structures, growing as the robot explores its environment, and containing a different number of nodes and relations for every environment. Therefore, we aimed to develop an approach to address these challenges, which led to GraphSPN, a general probabilistic framework for modeling graph-structured data with complex, noisy dependencies between a varying number of latent variables.

In our IROS'19 paper, we introduced TopoNets, which is based on both GraphSPN for modeling the environment's topological structure, as well as a SPN-based method (named DGSM), which classifies laser range observations into semantic place categories (e.g. office, corridor, kitchen etc.). TopoNets is a unified semantic mapping architecture that spans multiple levels of abstraction and spatial scales, from pixels (local place geometry) to semantics of buildings. Although GraphSPN was originally evaluated only with synthetic data, its practicality was demonstrated as experiments with TopoNets were conducted with a dataset of real-world robot observations, the COLD database.

Code

The code of GraphSPN can be found on github. Please follow instructions in documentation. Currently GraphSPN only supports templates where the data are stored on the nodes and not the edges, and the data must be discrete values. Contribution to extend GraphSPN's capabilities and applications are more than welcome.

Getting Started

Install tensorflow 1.8.0

Due to the compatibility with libspn, currently the tested version of tensorflow is at 1.8.0. Please refer to these instructions to install tensorflow. If you could test with other versions of tensorflow, and describe your experience or even provide a fix, that would be much appreciated.

Install libspn

- Clone the libspn repository:

git@github.com:zkytony/libspn.git - Make sure that you are on the

graphspnbranch. - Follow these instructions to install libspn.

Install graphspn

- Clone the graphspn repository:

git@github.com:zkytony/graphspn.git - In the terminal,

cdinto thegraphspndirectory - Run

pip install -e .to install the python package. - Now when you open a Python interactive session, you should be able to

import graphspn. - To test if things are working properly, run the script to reproduce AAAI'18 results as described in the following section.

Reproduce AAAI'18 results

The respository includes the data and scripts to reproduce the accuracy results of GraphSPNs in disambiguating noisy semantic classification experiment (the red curve in figure 5). To do this, go to graphspn/experiments/scripts and run

python reproduce_aaai2018.py Freiburg|Saarbrucken|Stockholm

The script uses the given building (either Freiburg, Saarbrucken, or Stockholm) for testing, and the other two for training. The script doesn't plot the curve and instead just outputs the accuracy results on the terminal. It completed in about 5 minutes on my computer (Ubuntu 16.04, GTX 1070 GPU, Intel i7 CPU and 16 GB RAM). If it is the first time you run this script, training of template SPNs may take several minutes. After the script completes, you should see a summary that looks similar to:

{'1PO': [893, 911, 0.9802414928649835],

'2PO': [1247, 1267, 0.984214680347277],

'BA': [167, 171, 0.9766081871345029],

'CR': [1524, 1538, 0.9908972691807543],

'DW': [372, 460, 0.808695652173913],

'KT': [564, 603, 0.9353233830845771],

'LAB': [868, 882, 0.9841269841269841],

'LO': [61, 70, 0.8714285714285714],

'MR': [110, 110, 1.0],

'UT': [750, 773, 0.9702457956015524],

'_overall_': 0.9653308060698609,

'_overall_by_class': 0.9501782015943115,

'_stdev_': 0.01679410293196196,

'_stdev_by_class': 0.059072659838922986,

'_total_correct_': 6556,

'_total_inferred_': 6785}

Because the experiment is not deterministic, the results may vary slightly.

You can change the hyper parameters or experiment settings by editing the reproduce_aaai2018.py script. All such configurations are listed at the top of the file, with self-explanatory names or comments. after the imports. For example, you can change the NOISE_LEVEL variable to be another value between 0 and 5 (indicating noise level 1 to 6 in table 1).

Citation

@inproceedings{zheng2018aaai,

author = {Zheng, Kaiyu and Pronobis, Andrzej and Rao, Rajesh P. N.},

title = {Learning {G}raph-{S}tructured {S}um-{P}roduct {N}etworks for Probabilistic Semantic Maps},

booktitle = {Proceedings of the 32nd AAAI Conference on Artificial Intelligence (AAAI)},

year = 2018,

address = {New Orleans, LA, USA},

month = feb,

archivePrefix = {arXiv},

primaryClass = {cs.LG},

eprint = {1709.08274}

}

Resources

Video explanation of the paper:

A shorter, better animated explanation of TopoNets (useful for understanding GraphSPN too):